Enhancing Conceptual Understanding and Programming Assistance

Ethical and Societal Impact Discussions

Preparation for Job Interviews

Artificial Intelligence (AI) is the vanguard of the fourth industrial revolution, transforming every industry and function across the globe. Recognizing this, the University of San Diego (USD) offers a dynamic Master of Science in Applied Artificial Intelligence (AAI) program. The program is designed to equip students with the practical skills and theoretical understanding necessary to navigate the rapidly evolving landscape of AI. Additionally, the program encourages students to adopt and utilize advanced Generative AI Tools, such as OpenAI’s ChatGPT, to enrich their learning experience and broaden their capabilities and practices in both education and career.

The MS in Applied Artificial Intelligence program at USD offers a rigorous curriculum emphasizing computational models of intelligence, machine learning, neural networks, IoT, deep learning, computer vision, natural language processing, MLOps, and AI ethical issues. With faculty renowned for their expertise and a focus on experiential learning, students are prepared to leverage Generative AI to solve complex real-world problems.

In this context, using the GPT-4 based AI model, ChatGPT, as a learning tool can be tremendously beneficial to students. ChatGPT, developed by OpenAI, is an AI language model capable of comprehending and generating human-like text. This model can provide tangible advantages to students in several ways.

GitHub Copilot

GitHub Copilot, an AI-driven code completion tool developed by OpenAI and GitHub, serves as an effective learning resource for programming students. The tool, based on the GPT-4 AI model, suggests code snippets and completions within integrated development environments (IDEs), accelerating the learning of coding languages and concepts. Copilot aids students’ understanding of syntax and programming patterns through its high-quality code examples based on industry best practices.

Moreover, Copilot enhances productivity by generating boilerplate code and reducing time spent on referencing syntax or documentation. It also helps students prevent syntax errors, suggests alternative problem-solving solutions, and offers valuable insights for advanced programming concepts, thereby boosting the quality of their code.

GitHub Copilot’s support for multiple languages expands students’ skill sets, preparing them for different development environments. It also exposes them to professional coding patterns and practices, a beneficial experience for future careers in programming.

However, while powerful, Copilot should be used responsibly. Students must actively engage with the suggested code to understand underlying programming concepts instead of relying solely on the tool. This balanced use will enable students to leverage Copilot’s capabilities and become proficient, confident programmers.

Use Case: Creating a Function

Let’s imagine you’re working on a Python project and want to create a function to calculate the factorial of a number, but you’re not exactly sure how to write it.

First, start by typing a comment or the beginning of the function to describe what you want to do. This could be something like:

As you start writing, GitHub Copilot will begin to provide suggestions for the code based on the context. It might suggest something like:

You can then accept the suggested code if it’s what you were looking to do, or you can continue typing if the suggestion isn’t quite right. Copilot will continue to adjust its suggestions based on what you type.

Remember, the suggested code should be reviewed and possibly modified based on your specific needs and understanding of the code. GitHub Copilot is a powerful tool for coding, but it’s not a substitute for understanding the logic and structure of the code you’re writing.

Enhancing Conceptual Understanding and Programming Assistance

AI, by nature, is a complex field with intricate theories and concepts. With its capacity for simplified explanations, ChatGPT can provide clear and concise insights to help students understand some of these concepts more efficiently. Moreover, it can be a valuable asset when it comes to Python programming, for example.

Use Case 1: Debugging Python Code

Consider a student working on a machine learning assignment and encountering an error they can’t resolve. The error might look something like this:

The student could input the error message into ChatGPT and ask for assistance. The AI might respond:

“This error often occurs when your dataset has missing or infinite values, or values too large for float64 to handle. Try using pandas’ isna().sum() function to check for missing values in your dataframe. If missing values are found, consider using fillna() or dropna() to handle them. Also, check for infinite values and replace or remove them appropriately.”

Let’s break down the different possible causes:

- NaN (Not a Number): NaN is a special value used to represent missing or undefined data in numerical computations. It typically arises when there is incomplete or invalid data in your dataset. Operations involving NaN values often result in NaN as well.

- Infinity: In Python, infinity (positive or negative) is represented as float(‘inf’) or float(‘-inf’). This usually happens when you perform mathematical operations that lead to infinite values, such as dividing by zero or performing other invalid mathematical calculations.

- Value too large for dtype(‘float64’): This occurs when you try to store a number that is too large to be represented using the ‘float64’ data type. The ‘float64’ type can represent a wide range of values, but it has limitations, and if you exceed them, you’ll get this error.

To debug this issue, you should check the data you are working with and identify the problematic entries. Some common approaches include:

- Check for NaN and infinity. Use functions like np.isnan() and np.isinf() in NumPy or pd.isnull() and pd.isinf() in pandas to identify the problematic entries.

- Print or display the data to identify any visible anomalies in the dataset.

- Ensure that your data is of the correct data type, especially if it contains very large values.

- If there are NaN or infinity values, decide how to handle them. You may need to impute missing values or remove rows/columns with NaN or infinity based on your analysis requirements.

- If the issue is with values too large for ‘float64’, consider scaling the data or using alternative data types (e.g., ‘float32’) if they are suitable for your analysis.

Use Case 2: Implementing Machine Learning Algorithms

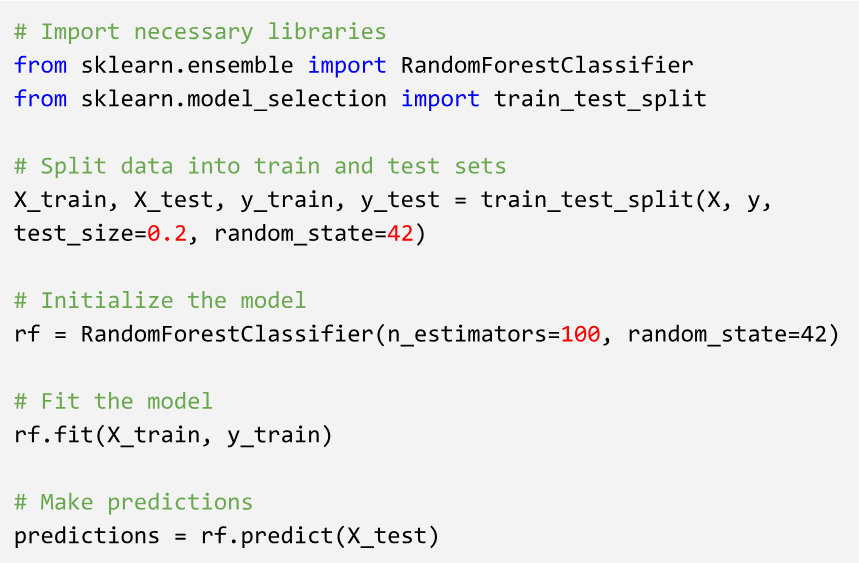

Suppose a student is trying to implement a Random Forest algorithm using scikit-learn but isn’t sure about the process. They could ask ChatGPT for guidance, and the AI might generate a step-by-step guide, including initializing the model, fitting the data, and making predictions.

Let’s break down the code and its output in more detail.

The code starts by importing the required libraries: RandomForestClassifier from sklearn.ensemble and train_test_split from sklearn.model_selection.

The code then splits the data into two sets: training set and test set. This is a common practice in machine learning to evaluate the model’s performance. The train_test_split function is used for this purpose. It takes X and y, which represent the features and corresponding labels, and splits them into four subsets: X_train, X_test, y_train, and y_test. The test_size=0.2 argument specifies that 20% of the data will be used for testing, and the rest (80%) will be used for training. The random_state=42 ensures reproducibility, as it sets a random seed for the data splitting process.

The Random Forest Classifier is initialized with the RandomForestClassifier class. It takes various hyperparameters, but here it is specified with two primary parameters:

n_estimators=100: This specifies the number of decision trees in the random forest. The value 100 indicates that the random forest will consist of 100 decision trees.

random_state=42: Similar to the previous use of random_state, this parameter sets a random seed for the random forest initialization. Setting a random seed ensures that the same results can be reproduced in the future.

The model is then trained using the training data (X_train and y_train) with the rf.fit(X_train, y_train) line of code. This process involves creating 100 decision trees (as specified by n_estimators) and training each tree on random subsets of the training data.

Once the model is trained, it is time to evaluate its performance on unseen data. The code uses the trained model (rf) to predict the labels for the test data (X_test) using the rf.predict(X_test) function. The predicted labels are stored in the predictions variable.

After running this code, you’ll have a trained Random Forest Classifier (rf) and a set of predictions (predictions). You can further evaluate the model’s performance by comparing the predicted labels (predictions) with the actual labels (y_test) from the test set. Common evaluation metrics for classifiers include accuracy, precision, recall, F1 score, and confusion matrix, among others.

Remember that the actual output of this code is not shown in the provided snippet. To see the predictions and assess the model’s performance, you would typically need to implement code to compute evaluation metrics or visualize the results.

Training ChatGPT

Training ChatGPT to better cater to the programming needs of students involves providing it with extensive, varied, and high-quality training data. This involves the following steps:

- Data Collection: Compile code snippets, bug reports, solutions, and conversations around Python programming. Make sure to include data reflecting a range of difficulties, from beginner to advanced level problems.

- Data Annotation: Label the data appropriately to ensure the model understands the context. For instance, label whether a text is a question, an error message, or a piece of code.

- Model Training: Train the model using supervised learning, where human AI trainers provide answers to programming questions or solutions to bugs. The responses given by the trainers become part of the training data.

- Evaluation and Iteration: Regularly evaluate the model’s performance and iterate the training process. This should involve both quantitative metrics (like accuracy) and qualitative analysis (like reviewing model outputs).

The application of ChatGPT in programming and Generative AI tools provides a glimpse into the future of AI-enhanced learning. It showcases how AI can be an invaluable resource, assisting in problem-solving, and accelerating the learning process for students. As models like ChatGPT continue to evolve, they will undoubtedly become increasingly embedded in AI and data science programs similar to those of the MS in Applied Artificial Intelligence (AAI) at the University of San Diego.

Research Guidance

AI is a vast field, and navigating the research landscape can be daunting. ChatGPT, with its ability to summarize and explain research papers, can make this task significantly easier. It can help students stay updated with the latest research trends and breakthroughs, guiding them to relevant research papers and simplifying complex academic language.

Ethical and Societal Impact Discussions

The ethical implications and societal impacts of AI are critical areas of discussion in the AAI program. ChatGPT and other Generative AI tools and applications can facilitate discussions, providing perspectives on different ethical dilemmas and potential societal consequences. This can stimulate a more profound understanding of the human-centric considerations of AI, which is crucial for any AI practitioner.

Preparation for Job Interviews

Job interviews in the AI field can be challenging, often encompassing a wide range of topics, from algorithms to data structures and programming languages. With ChatGPT and other Generative AI tools, students can engage in mock interviews, strengthening their technical knowledge and improving their communication skills. It can also provide advice on tackling tricky questions and presenting work experience or projects effectively.

To tailor ChatGPT to better cater to the programming needs of students, it’s essential to train it with extensive, varied, and high-quality data involving data collection, annotation, model training, evaluation, and iteration.

In conclusion, the MS in AAI program at the University of San Diego offers an enriching and comprehensive academic experience for aspiring AI and machine learning professionals. Incorporating advanced Generative AI tools like ChatGPT into the learning process not only enhances the educational experience but also provides practical skills and insights that are invaluable in the AI industry. As AI continues to evolve and impact various sectors, tools such as ChatGPT will undoubtedly become an integral part of the educational ecosystem and people’s future careers.