Artificial intelligence is becoming increasingly unavoidable in our daily lives. Industries such as healthcare, finance, communications and even education are being impacted by the rapid advancement and proliferation of AI technologies. But, because AI has vast potential to optimize efficiency and accelerate development, there is a real need to develop safeguards that guide how society uses artificial intelligence. These guidelines – better known as an AI governance framework – are aimed at improving transparency, accountability and fair use of AI.

In this blog post, we will highlight the features of AI governance, the principles of an effective AI governance policy, the challenges associated with this work and what implementing these policies will look like for years to come.

What Is AI Governance?

Generally speaking, AI governance is designed to balance the advancement of artificial intelligence with protections for people and society as a whole. More technically speaking, AI governance is defined as “the established set of guidelines, policies and practices that govern the ethical, legal and societal implications of AI and machine learning technology.”

Numerous factors go into establishing AI governance practices, including but not limited to:

- Ethical guidelines

- Regulatory parameters and laws for safe and effective use

- Risk management to ensure AI is used properly

- Transparency around AI processes, technological advancement and data usage

Why Is AI Governance Important?

Artificial intelligence poses an ethical quandary for society as a whole. While it could be the most impactful technology of our lifetimes, without effective AI governance its impact could be as harmful as it is beneficial.

There are six primary ethical implications of AI that should be considered as we continue to build AI governance frameworks:

- Data Bias

- Privacy

- Accountability

- Job Displacement

- Transparency

- Future AI Development

AI governance is critical because it is the means by which we establish clear guidelines and standards for data privacy and security. With an AI governance framework in place, organizations can demonstrate their commitment to accountability and transparency, ensuring that any misuse of data is addressed and rectified.

Who Is Responsible for AI Governance?

As it currently stands, AI governance falls to three groups: governments, private companies and academic institutions. While their work is similar, each stakeholder group leads the way in different facets of AI governance.

- Governments set the broader standards and regulations, with responsibilities that include policy development, oversight and enforcement.

- Private Companies either manage the use and development of their own AI technology or are hired to implement it for other organizations. They hold themselves responsible for implementing ethical standards, data protection and cybersecurity, technological innovation and collaboration with different stakeholder groups.

- Academic Institutions lead the way in research and development, advising the other groups on effective education and training, policy advocacy and collaboration.

Together, these leaders in AI governance are helping to foster an ecosystem of balance and trust that allows AI to continue to evolve while still protecting public and private entities.

Principles of AI Governance

There are four core principles that shape AI governance – transparency, accountability, fairness and ethics.

- Transparency refers to making AI systems and how they work easier to understand. This principle helps stakeholders comprehend how AI operates, what data it uses and the rationale behind what it ultimately generates.

- AI accountability is the role of “being responsible for the actions and decisions of AI, as well as the impact AI systems have on individuals and society.” With these measures for oversight, it is easier to foster trust and protect those who could be impacted by AI.

- Fairness speaks to the steps taken to eliminate bias and discrimination in AI systems. To achieve that, data and algorithms must be examined to eliminate biases and ensure outcomes that promote equity and benefit a wide range of users.

- Ethics in AI governance requires stakeholders to evaluate the ethical parameters of AI deployment, considering issues like privacy, consent and the potential for harm. Using these tools, organizations can promote the responsible use of AI.

Global AI Governance Frameworks

An AI governance framework is a set of policies and practices designed to oversee the development, deployment and use of artificial intelligence technologies. These guidelines typically encompass regulatory parameters, risk management, monitoring and education.

There are existing AI governance frameworks in place around the world in various industries and governments. Some of those include:

- IEEE Ethical Guidelines: Developed by the Institute of Electrical and Electronics Engineers (IEEE), these guidelines focus on the importance of transparency, accountability and the need for AI systems to align with human values. They have named their framework “Ethically Aligned Design.”

- EU AI Act: The European Union AI Act calls for regulation of AI technologies based on their assigned risk level categories – minimal risk, limited risk, high risk and unacceptable risk. Each category carries its own regulatory requirements.

- OECD Principles on AI: Implemented in 2019 and updated in 2024, the Organisation for Economic Co-operation and Development (OECD) Principles on AI provide policymakers with recommendations for effective AI policies. To date, the European Union, the Council of Europe, the United States, the United Nations and other jurisdictions use the OECD’s framework in their work on AI.
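For organizations that want to apply the EU AI Act's risk-based approach to their own tools, the four categories can be captured in a small data structure. The following is a minimal sketch, not a legal classification: the system names, their tier assignments and the `needs_escalation` rule are hypothetical assumptions added for illustration.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk categories named in the EU AI Act."""
    MINIMAL = "minimal risk"
    LIMITED = "limited risk"
    HIGH = "high risk"
    UNACCEPTABLE = "unacceptable risk"


# Hypothetical internal inventory: system names and tier assignments are
# illustrative assumptions, not legal determinations.
AI_INVENTORY = {
    "email-spam-filter": RiskTier.MINIMAL,
    "customer-support-chatbot": RiskTier.LIMITED,
    "resume-screening-model": RiskTier.HIGH,
}


def systems_in_tier(inventory, tier):
    """Return the sorted names of systems assigned to the given tier."""
    return sorted(name for name, assigned in inventory.items() if assigned is tier)


def needs_escalation(tier):
    """Assumed internal rule: high and unacceptable tiers go to a review board."""
    return tier in (RiskTier.HIGH, RiskTier.UNACCEPTABLE)


for name, tier in AI_INVENTORY.items():
    action = "escalate for review" if needs_escalation(tier) else "standard handling"
    print(f"{name}: {tier.value} -> {action}")
```

Keeping the tiers in one enumerated type means every system in the inventory must be assigned to exactly one named category, which makes gaps easier to spot during an audit.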

Challenges of AI Governance

Because of the nature of AI development, there are inherently many challenges that come with AI governance. From the rapid pace of technological advancements to the varied nature of global regulations, there is no seamless path to a universal AI governance framework.

As experts, industry leaders and government officials continue to work on establishing guidelines for AI use, they continually cite these common AI governance challenges:

- Balancing Innovation with Regulation: The fast pace of AI development can outstrip existing regulatory frameworks and governance structures, making it difficult for policymakers to keep up with innovations and potential risks. In an academic setting, for example, AI can be an invaluable resource that helps level the playing field for students with different backgrounds. Unfortunately, it can also just as easily be used for malicious purposes, such as to plagiarize.

- Ensuring Data Privacy and Security: Arguably the biggest challenge with AI governance is ensuring and maintaining data privacy and security. AI systems rely on enormous datasets that could – purposely or accidentally – access sensitive or private data. As AI tools continue to evolve, so does the need for privacy and security systems that can keep up with that level of advancement.

- Eliminating Bias and Promoting Fairness: There are inherent biases in AI systems, and detecting and removing them is both complex and time-consuming. This work is required, however, as addressing bias in AI systems is the only way to work towards fairness and equity.
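To make the bias challenge more concrete, one common starting point is to compare how often different groups receive a favorable outcome from a system. The sketch below computes that gap, sometimes called the demographic parity difference. It is only one of many fairness measures, and the group labels and sample data are made up for illustration.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable outcomes per group.

    `records` is an iterable of (group, outcome) pairs, where outcome is
    1 for a favorable decision and 0 otherwise.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        favorable[group] += outcome
    return {group: favorable[group] / totals[group] for group in totals}


def demographic_parity_gap(records):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Made-up audit sample: four decisions each for hypothetical groups "A" and "B".
sample = [
    ("A", 1), ("A", 1), ("A", 0), ("A", 1),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]
gap = demographic_parity_gap(sample)
print(f"Selection rates: {selection_rates(sample)}")
print(f"Demographic parity gap: {gap:.2f}")
```

A gap near zero suggests the groups are treated similarly on this one measure. A large gap does not prove discrimination on its own, but it signals where the data and the model deserve closer examination.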

Levels of AI Governance

AI is governed at the local, national and international levels, but it is critical that these groups coordinate their efforts.

At a local level, AI governance speaks more to an organization’s idea of an AI governance framework. Depending on the industry, organizations need to develop policies and practices that ensure ethical, secure and proper use of AI tools and technology.

In the United States, there is no one single body that oversees AI governance at a national level. However, there are various agencies and leaders who have worked to implement regulations that deal with data privacy, AI deployment and development and other ethical considerations. It is important to note that the White House has published a blueprint for an “AI Bill of Rights” that aims to provide guidance for AI systems, biases, data privacy and more.

There is also some international collaboration when it comes to AI governance. The IEEE Ethical Guidelines, EU AI Act and OECD Principles are governance frameworks that are being adopted and implemented around the globe.

Regardless of the level of AI governance, it’s important to differentiate between informal, ad hoc and formal levels of governance.

- Informal: This typically encompasses unstructured and unofficial practices that are naturally adopted by organizations or communities.

- Ad hoc: As the name implies, these are AI governance frameworks created in response to a specific prompt or challenge, and are often temporary.

- Formal: These are the formal AI rules and standards that are put in place to direct AI research, development and application to ensure safety, fairness and respect for human rights.

Examples of AI Governance

AI Governance in Healthcare

In 2020, the U.S. Food and Drug Administration (FDA) established the Digital Health Center of Excellence with three primary objectives: to connect and build partnerships to accelerate digital health advancements; to share knowledge to increase awareness and understanding, drive synergy and advance best practices; and to innovate regulatory approaches to provide efficient and least burdensome oversight while meeting the FDA standards for safe and effective products. This governance framework aims to ensure patient safety while fostering innovation in digital health technologies and AI.

AI Governance in Finance

In the U.S., the Office of the Comptroller of the Currency established the Office of Financial Technology (OFT) to regulate “financial technology areas involving bank-fintech arrangements, artificial intelligence, digital assets and tokenization, as well as other new and changing technologies and business models that affect OCC-supervised banks.”

AI Governance in Academia

A new report from MIT SMR Connections outlines strategies higher education institutions are and should be implementing to govern generative AI. For example, the University of Michigan established an AI committee that oversees AI literacy training for faculty, staff and students, and reports regularly on effective use of AI. The university even built its own generative AI tools, which see thousands of users daily. At the University of Arizona and Texas A&M University, faculty are offered AI literacy lessons and the opportunity to test out various AI tools. Lastly, the University of San Diego’s Center for Educational Excellence created a resource hub for faculty, staff and administrators that guides and informs them about the use of generative AI in education.

Organizational Implementation of AI Governance

Implementing an AI governance framework at an organizational level is not a one-size-fits-all solution. There are some steps, however, that have helped many groups successfully develop and implement their AI governance practices.

Define Objectives and Scope – Begin by identifying goals and objectives of the AI governance policies that will ensure ethical use and risk mitigation. It’s also key to determine the scope of this implementation and which systems will be on-boarded first.

Engage Stakeholders – Since this isn’t a task that can be managed by one person, it’s critical to involve a diverse group of relevant stakeholders in the process. Business leaders, legal experts, technology leads and others should be organized into committees and working groups to oversee the development of these policies.

Implement Training and Awareness Programs – Once the official parameters of the AI governance framework are established, effectively disseminating that information is the natural next step. Make training available to employees that focuses on policy and best practices, as well as promoting a culture of ethical AI use.

Monitor, Evaluate, and Adapt – An AI governance policy can only be effective if it is regularly monitored, evaluated and updated. It should be monitored for compliance, reviewed based on feedback and technology updates and revised if changes would improve its utilization and security.
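As one way to support the monitoring step, an organization could keep a simple register of its AI systems and flag any that are overdue for a policy review. The sketch below is a hypothetical example: the `GovernedSystem` fields, the sample entries and the 180-day review interval are assumptions, not a prescribed standard.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class GovernedSystem:
    """One entry in a hypothetical AI system register."""
    name: str
    owner: str
    last_review: date


def overdue_reviews(systems, today, max_age_days=180):
    """Return names of systems whose last review is older than max_age_days."""
    cutoff = today - timedelta(days=max_age_days)
    return [s.name for s in systems if s.last_review < cutoff]


# Illustrative register entries; names, owners and dates are made up.
register = [
    GovernedSystem("support-chatbot", "customer-success", date(2024, 1, 15)),
    GovernedSystem("fraud-scoring", "risk-team", date(2024, 9, 1)),
]

flagged = overdue_reviews(register, today=date(2024, 10, 1))
print("Overdue for review:", flagged)
```

Passing `today` in as an argument, rather than reading the system clock inside the function, keeps the check repeatable and easy to test.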

Here are some of the leading organizations and government agencies with internal AI ethics committees and governance policies:

- Google’s AI Principles

- AI Now Institute

- IBM

- Vector Research Institute

- Nvidia/NeMo Guardrails

- AI Ethics Framework

The Future of AI Governance

The AI landscape – and therefore the AI governance landscape – is constantly evolving. According to a recent Forbes article, AI is expected to see an annual growth rate of 37.3% from 2023 to 2030. With such a rapid growth rate comes an increased need to establish firm governance parameters that ensure development occurs ethically and securely.
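To put that growth rate in perspective, the short calculation below compounds 37.3% per year over the period cited. It is a rough sketch that assumes the rate compounds annually across seven periods (2023 to 2030). The function name is illustrative, and the result is a multiple of the starting size rather than a figure taken from the article.

```python
def compound_growth_multiple(annual_rate, years):
    """Return how many times larger a quantity becomes after compounding."""
    return (1 + annual_rate) ** years


# Assumption: seven annual compounding periods between 2023 and 2030.
multiple = compound_growth_multiple(0.373, 2030 - 2023)
print(f"Implied growth multiple: {multiple:.1f}x")
```

Under these assumptions the market would end the period at roughly nine times its starting size, which helps explain why governance efforts are under pressure to keep pace.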

Thankfully, proactive steps are being taken. There are emerging trends in AI governance that focus on ethical frameworks, regulatory standards across industries and borders, and a commitment to transparency, security and privacy.

As these initiatives continue to gain momentum, the work to establish ethical standards and accountability will shape a more responsible AI landscape for the future.

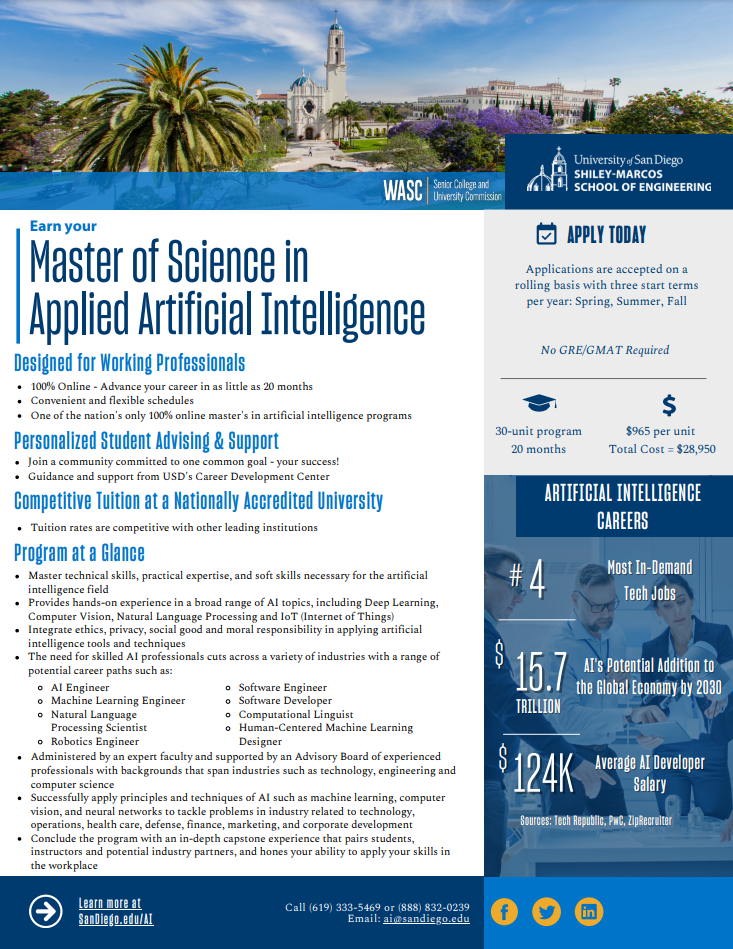

Helping to Shape the Future of AI Governance

If you’re interested in helping to shape the frameworks that govern artificial intelligence, we invite you to take a look at the University of San Diego’s Master of Science in Applied Artificial Intelligence program, which includes an Ethics in Artificial Intelligence course. Students will examine the issues mentioned here and develop a deep understanding of both the positive and negative consequences that come with the proliferation of AI tools and technology.

If you’re thinking about pursuing an advanced education in artificial intelligence, read the free eBook, “8 Questions to Ask Before Selecting an Applied Artificial Intelligence Master’s Program.”