The fields of Instructional Design (ID) and Learning Design (LD) are built around models and theories to ensure that educational content is able to address the needs of learners. Instructional design models like Analysis, Design, Development, Implementation and Evaluation (ADDIE), the Successive Approximation model (SAM) and Backwards Design are used to create learning experiences, and are then followed by an evaluation and review to refine the process and create deeper engagement with learners.

In this post, we explore one of the most popular evaluation models — the Kirkpatrick Evaluation model — and how it can be used to measure outcomes and improve learning results.

What Is the Kirkpatrick Evaluation Model?

The Four Levels of the Kirkpatrick Model

Benefits & Challenges of the Kirkpatrick Model

How to Use the Kirkpatrick Model

How Does Instructional Design Employ Evaluation Models?

What Is the Kirkpatrick Evaluation Model?

The Kirkpatrick Model of evaluation was developed by Donald Kirkpatrick in 1959 as a means of understanding how an organization’s training or educational process is functioning, and how the people within it are performing. It works by providing a model to capture and then analyze data to measure both immediate impacts and longer-term results.

Even after 60+ years, the Kirkpatrick training model continues to be the best-known and most widely used means of evaluating the effectiveness of training programs. A study published by the Institute for Employment Studies noted that the model “remains useful for framing approaches to training and development evaluation” and can be applied in the evaluation of any type of educational or interactive program.

The Kirkpatrick method works by breaking the evaluation of a training or education process into four distinct levels to identify the effects of the training and how well it is contributing to successful learning outcomes.

The Four Levels of the Kirkpatrick Model

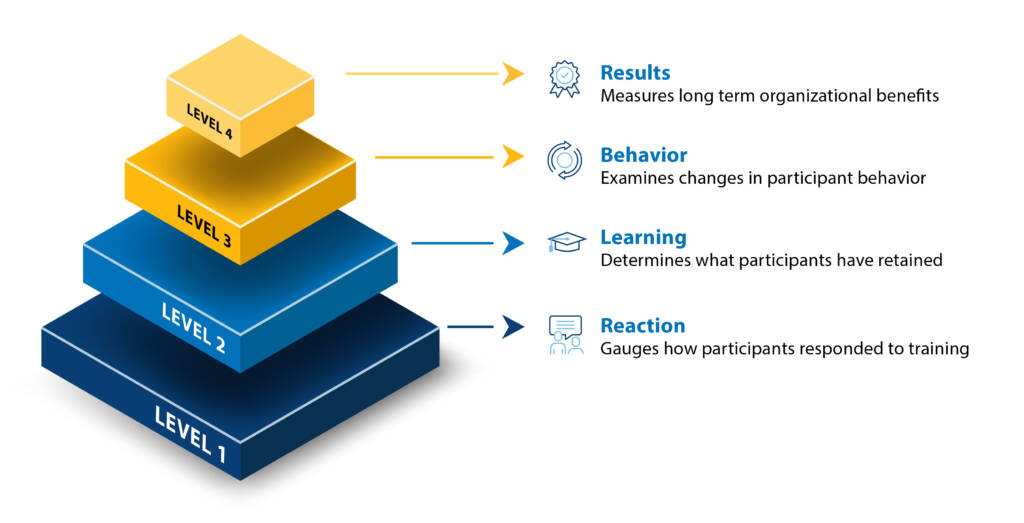

There are four levels that come in increasing order of complexity, as shown in the following image:

Data gained from the earlier levels tends to be more immediate and personal to learners, while the higher levels focus on long-term benefits that can be applied across an organization. These later levels require more complex data gathering and analysis, which makes the results more informative, but also more time-consuming and complicated to measure.

As the International Society for Educational Technology notes, this means those who use the Kirkpatrick Model of training evaluation may not progress beyond the second level due to the required time investment or budgetary restrictions.

Here’s what the levels cover and how they build on each other.

Level 1: Reaction

Kirkpatrick defines the first and most immediate evaluation as capturing how participants find a training or instruction to be “favorable, engaging, and relevant.” Educators, trainers and administrators collect reactions through short surveys provided at the end of a session, sometimes through paper “smile sheet” handouts or through one-on-one interviews. Modern evaluations often use email invitations to online surveys and digital assessment tools such as Questionmark, Qualtrics and SurveyMonkey.

A survey will ask how the participants feel about the program, whether they found it useful, and their impressions of the experience. Sometimes this data is combined with additional learner feedback provided through comment forms or direct verbal responses from participants.

This initial level requires the least amount of time and budget to administer and is helpful for determining how informative the content was and how effectively it was delivered. On the other hand, this level also provides the least useful insights, as it doesn’t offer any data on how effectively the information was synthesized or how problematic areas could be improved.
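To make the Level 1 tally concrete, here is a minimal sketch of how end-of-session survey responses might be summarized. The 1-to-5 rating scale, the question names, the sample responses and the `summarize_reactions` helper are all assumptions made for illustration; they are not part of the Kirkpatrick Model or of any survey tool named above.

```python
from statistics import mean

# Hypothetical responses on a 1-5 scale (5 = strongly agree). The question
# keys borrow the "favorable, engaging, and relevant" wording for illustration.
responses = [
    {"favorable": 5, "engaging": 4, "relevant": 5},
    {"favorable": 4, "engaging": 3, "relevant": 4},
    {"favorable": 2, "engaging": 3, "relevant": 4},
    {"favorable": 5, "engaging": 5, "relevant": 3},
]


def summarize_reactions(responses, favorable_threshold=4):
    """Return the mean score and share of favorable ratings per question."""
    summary = {}
    for question in responses[0]:
        scores = [r[question] for r in responses]
        # Count ratings at or above the (assumed) "favorable" cut-off.
        favorable = sum(1 for s in scores if s >= favorable_threshold)
        summary[question] = {
            "mean": round(mean(scores), 2),
            "pct_favorable": round(100 * favorable / len(scores), 1),
        }
    return summary


summary = summarize_reactions(responses)
for question, stats in summary.items():
    print(question, stats)
```

A summary like this shows which aspects of a session landed well, but, as noted above, it says nothing about what participants actually learned.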

For those kinds of insights, we’ll need to progress to the higher levels.

Level 2: Learning

This level is defined as where instructors determine whether the learners managed to acquire the “intended knowledge, skills, attitude, confidence, and commitment based on their participation in the training.” If there’s a pattern in what learners struggled to understand or retain, that could point to a problem in an area of the instruction or to specific material that might be more challenging for the learners than intended.

Level 2 learning assessments can be administered via written or computer-based quizzes or tests or through direct interviews and observations. For more detailed assessment, participants may be asked to perform a task relating to the training and then judged on their level of success. Pre-tests can also be administered prior to training to more accurately measure the degree of “before and after” improvements.
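The “before and after” comparison can be sketched in a few lines. The example below assumes each learner has one pre-test and one post-test score on the same 0-to-100 scale. Alongside the raw gain it reports a normalized gain (the share of the available improvement that was actually achieved), a measure often used in education research but not one the Kirkpatrick Model prescribes. The learner names, scores and `learning_gain` function are hypothetical.

```python
def learning_gain(pre, post, max_score=100):
    """Return (raw gain, normalized gain) for one learner.

    Normalized gain is the raw gain divided by the room for improvement
    (max_score - pre). It is None when the pre-test was already perfect.
    """
    raw = post - pre
    headroom = max_score - pre
    normalized = raw / headroom if headroom > 0 else None
    return raw, normalized


# Hypothetical (pre-test, post-test) scores per learner.
scores = {
    "learner_a": (40, 70),
    "learner_b": (60, 90),
    "learner_c": (80, 85),
}

gains = {name: learning_gain(pre, post) for name, (pre, post) in scores.items()}
for name, (raw, normalized) in gains.items():
    print(name, raw, normalized)
```

Here two learners with the same raw gain of 30 points end up with different normalized gains, because the one who started higher had less room to improve.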

Aside from evaluating the efficacy of the training, this data can also be used to determine whether participants should receive credit for the course, or if they’re eligible for licenses or certificates. While measuring learning outcomes provides an understanding of learners’ immediate and short-term gains, we need to move up another level to determine any long-term value.

Level 3: Behavior

The third level of Kirkpatrick’s training evaluation seeks to determine whether or not learners’ behaviors have changed as a result of the program. Beyond the initial impressions or immediate knowledge retention, Kirkpatrick describes this level of evaluation as offering a real opportunity for instructors to see if a training has been successful and participants can “apply what they learned during training when they are back on the job.”

Accurately measuring behavior can be challenging, as different participants are likely to manifest changes over extended periods of time. Initial successes might wane as some learners return to bad habits, while other learners may steadily improve over time as they practice their technique and refine their knowledge through repetition. For those reasons, the most effective performance metrics are ones that can be captured consistently over time, whether through occasional direct on-the-job observations and regular performance reviews or through a system that automatically tracks relevant variables, such as daily sales numbers or reviews from satisfied customers.

The most complete measurement of learners’ behavior comes from combining relevant metrics with regular performance reviews, as well as peer- and self-reviews. When used in coordination, these measurements provide comprehensive “360-degree feedback” that covers all areas of a learner’s growth.

If we want to measure a training’s effects on the larger organization, though, we’ll need to advance to the fourth and final level of the Kirkpatrick evaluation model.

Level 4: Results

According to Kirkpatrick, this level measures how the training program contributes to the success of the organization “as a result of the training and the support and accountability package.” Specifically, it attempts to measure the changes in a specific area, which can include sales, spending, customer satisfaction and overall efficiency in order to determine the return on investment (ROI).*
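As a rough illustration of the ROI figure mentioned above, one commonly used formulation expresses net benefits as a percentage of program costs. The sketch below assumes that formulation. The function name and the dollar amounts are hypothetical, and putting a monetary value on training benefits is itself a judgment call that the code does not address.

```python
def training_roi_percent(monetary_benefits, program_costs):
    """Return ROI as a percentage: net benefits divided by costs, times 100."""
    if program_costs <= 0:
        raise ValueError("program_costs must be greater than zero")
    return (monetary_benefits - program_costs) / program_costs * 100


# Hypothetical figures: $150,000 in measured benefits on a $100,000 program.
roi = training_roi_percent(150_000, 100_000)
print(f"ROI: {roi:.1f}%")
```

A positive percentage means the measured benefits exceeded the cost of the program, while a negative one means they fell short.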

This final level is the most challenging one to realize, as it can require a significant investment of time and budget to accurately measure results. It can be hard to establish a direct link between a training program and changes in performance, as improper or incorrect data can skew results. One way to effectively measure the impact of the training would be to use a control group, and then compare performance over time between it and the training group.
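The control-group comparison described above can be sketched as follows. This is a simplified illustration, assuming one performance metric (here, hypothetical weekly sales per person) measured before and after the training period for both groups. It subtracts the control group’s average change from the trained group’s average change, so that improvements both groups share are not credited to the training. It does not test for statistical significance, and all names and numbers are invented for the example.

```python
from statistics import mean


def mean_change(before, after):
    """Average change in a metric for one group between two periods."""
    return mean(after) - mean(before)


def estimated_training_effect(trained_before, trained_after,
                              control_before, control_after):
    """Trained group's average change minus the control group's change."""
    return (mean_change(trained_before, trained_after)
            - mean_change(control_before, control_after))


# Hypothetical weekly sales per person, before and after the training period.
trained_before = [20, 22, 18]
trained_after = [26, 27, 25]
control_before = [21, 19, 20]
control_after = [22, 20, 21]

effect = estimated_training_effect(
    trained_before, trained_after, control_before, control_after
)
print("Estimated effect of training:", effect)
```

In this made-up data the trained group improves by 6 sales per week on average and the control group by 1, so the estimated effect attributable to the training is 5.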

When done correctly, this level of evaluation offers the most valuable data for an organization, providing insights into both how a training program has created benefits, and also how it can be improved.

* Note that some applications of the Kirkpatrick Model are designed so that ROI is separated out as its own fifth level of evaluation. For simplicity’s sake, we’re keeping our review of the model to four levels.

Benefits & Challenges of the Kirkpatrick Model

The Kirkpatrick training model is still widely used because of the clear benefits it provides for instructors and learning designers:

- It outlines a clear, simple-to-follow process that breaks up an evaluation into manageable levels.

- It works with both traditional and digital learning programs, whether in-person or online.

- It is a highly flexible and adaptable model, making it easy to implement across different environments and fields.

- It provides instructors with a means of gathering feedback to continually improve their training programs and methods.

- It gives managers and leaders insight into the effectiveness of training programs and their overall impact on an organization.

However, there are also some challenges and concerns to be careful of when employing the Kirkpatrick Model:

- The third and fourth levels are more time-consuming to employ correctly and can be costly to implement; organizations will need to plan accordingly to take advantage of these higher levels.

- It can be difficult to directly link specific results to the effects of the training. IDs and their organizations will need to carefully design evaluation metrics to avoid drawing the wrong conclusions.

- Even with carefully developed metrics, it may not be possible to directly prove the efficacy of the program or a positive ROI without a long-term and consistent measurement of results.

- The Kirkpatrick Model is only employed after a training or instructional program is finished, which means it’s harder to adjust or improve a training that is in progress.

How to Use the Kirkpatrick Model

To address some of the challenges with utilizing the Kirkpatrick Model and to maximize its benefits, it’s recommended to start at the highest level and work backwards. That means the first step should be to ask questions about your higher-level, long-term goals:

- What are the results that the organization is looking for?

- Which areas need to see the results?

- What are the most important benefits to achieve?

After determining the long-term goals, consider which behaviors need to be developed or encouraged in order to achieve them. As an ID or LD you should consult with subject matter experts (SMEs) and stakeholders to curate a list of what’s possible:

- What will positive changes in behavior look like?

- How will learning outcomes be accurately measured?

- What “level” of success is acceptable?

From there, determine the knowledge and skills that learners will need to develop to enable those positive behaviors. This will require assessing their current knowledge, experience and skill levels to determine which areas are lacking.

Finally, the training program itself will need to be evaluated, tested and revised in order to ensure that it’s accessible, relevant and enjoyable for all learners. This will help to ensure a more positive reaction to the material and that the information is understandable and easier to synthesize.

Following these instructional design principles can aid in the development of a program that is effective for your learners and beneficial for any organization.

How Does Instructional Design Employ Evaluation Models?

Instructional design seeks to provide best-practice solutions in how to bridge knowledge, skill and attitude gaps between different learners. If you’re interested in the most effective means of employing the Kirkpatrick Model — or other important evaluation models — to train and educate people in today’s digital age, consider the University of San Diego’s online Master of Science in Learning Design and Technology program.

You’ll be introduced to a very high-level overview of Kirkpatrick’s Model in our foundational course, followed by a more detailed review in our Program Design, Assessment and Evaluation course. Regardless of the evaluation model you choose to employ when designing and revising an instructional solution, mastering ID principles will help you create effective training and educational materials. Learn more about how you can master these skills and techniques at USD LDT.
